Vol: 2 • Issue: 4 • April 2012

Barnstars work; Wiktionary assessed; cleanup tags counted; finding expert admins; discussion peaks; Wikipedia citations in academic publications; and more

With contributions by: Lambiam, Piotrus, Jodi.a.schneider, Amir E. Aharoni, DarTar, Tbayer, Steven Walling, Junkie.dolphin and Protonk

Recognition may sustain user participation

To gain insight into what makes Wikipedia tick, two researchers from the Sociology Department at Stony Brook University conducted an experiment with barnstars.[1] They were surprised by what they found.

Professor Arnout van de Rijt and graduate student Michael Restivo wanted to test the hypothesis that receiving recognition for one’s work in an informal peer-based environment such as Wikipedia has a positive effect on productivity. To do so, they identified the top 1% most productive English Wikipedia users among currently active editors who had not yet received their first barnstar. From that group they drew a random sample of 200 users, which they then randomly split into an experimental group and a control group of 100 users each. They awarded a barnstar to each user in the experimental group; the users in the control group were not given a barnstar. The results confirmed the researchers’ hypothesis: the productivity of the users in the experimental group was significantly higher than that of the control group. What really took the researchers by surprise was how long-lasting the effect was. They followed the two groups for 90 days and observed that the increase in contribution level among the barnstar recipients persisted, almost unabated, for the full observation period.
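The sampling and assignment steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers’ actual code: the input list of eligible editors, the function names and the optional fixed seed are hypothetical, and the paper’s own productivity measure and significance test are not reproduced here.

```python
import random
from statistics import mean

def assign_groups(eligible_editors, sample_size=200, seed=None):
    """Draw a random sample of editors and split it into two equal groups.

    `eligible_editors` is a hypothetical list of usernames, assumed to be
    already filtered to the most productive active editors without a barnstar.
    """
    rng = random.Random(seed)
    # random.sample returns the chosen items in selection order, which is
    # random, so slicing the result in half is itself a random split.
    sample = rng.sample(eligible_editors, sample_size)
    half = sample_size // 2
    return sample[:half], sample[half:]

def mean_difference(edit_counts, treatment, control):
    """Difference in mean edit counts between the two groups.

    `edit_counts` is a hypothetical mapping from username to the number of
    edits made during the observation period.
    """
    return mean(edit_counts[u] for u in treatment) - mean(edit_counts[u] for u in control)

if __name__ == "__main__":
    editors = [f"Editor{i}" for i in range(2000)]
    treatment, control = assign_groups(editors, seed=42)
    print(len(treatment), len(control))  # 100 100
```

Randomizing the assignment, rather than choosing recipients by hand, is what allows a later difference in means to be attributed to the barnstar rather than to pre-existing differences between the groups.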

One major factor the experiment did not take into account was whether it mattered who delivered the barnstars, and whether the senders were anonymous, registered, or known members of the Wikipedia community. During the experiment, it was noted on the Administrators’ noticeboard/Incidents page that a seemingly random IP editor was “handing out barnstars”, which aroused some suspicion among Wikipedians. The thread was closed after User:Mike Restivo confirmed that he had accidentally logged out when delivering the barnstars. He did not, however, declare his status as a researcher, and the group’s paper does not disclose that the behavior was considered unusual enough to warrant such a discussion thread.

Can Wiktionary rival traditional lexicons?

A chapter titled “Wiktionary: a new rival for expert-built lexicons?”,[2] in a forthcoming Oxford University Press collection on electronic lexicography, describes and critically assesses Wikipedia’s second-oldest sister project (which will celebrate its 10th anniversary in December this year). Its subtitle is “Exploring the possibilities of collaborative lexicography”, an approach the authors call a “fundamentally new paradigm for compiling lexicons”.

The article describes in detail the technical and community features of Wiktionary. Although this is not immediately apparent, the article covers several language editions, not just English (the exclusive focus of much research about Wikipedia and its sister projects). It gives a comprehensive account of the coverage of the world’s languages by the various Wiktionary language editions. The critical analysis of Wiktionary’s content begins with what appears to be a thorough statistical comparison with other dictionaries and wordnets, including an examination of the overlap in the lexemes covered, which the authors found to be surprisingly small.

| Number of native terms (p. 17) | Wiktionary | wordnets | Roget’s Thesaurus | OpenThesaurus |
|---|---|---|---|---|
| English language | 352,865 | 148,730 (WordNet) | 59,391 | |
| German language | 83,399 | 85,211 (GermaNet) | | 58,208 |
| Russian language | 133,435 | 130,062 (Russian WordNet) | | |

The article notes an important characteristic of open wiki projects: they allow “updating of the lexicons immediately, without being restricted to certain release cycles as is the case for expert-built lexicons” (p. 18). Though this characteristic is obvious to experienced Wikimedians, it is frequently overlooked. The discussion of how polysemy and homonymy are organized is comprehensive, although limited to the English Wiktionary; other language editions may handle them differently. The article notes that “it is a serious problem to distinguish well-crafted entries from those that need substantial revision by the community”, which is good constructive criticism. The paragraphs about “sense ordering” make some vague claims (e.g. “Although there is no specific guideline for the sense ordering in Wiktionary, we observed that the first entry is often the most frequently used one”), which could be interesting and useful from a community perspective but offer little actionable evidence and should be investigated further. The paper’s conclusions identify some of the features that enable Wiktionary to rival expert-built lexicons: “We believe that its unique structure and collaboratively constructed contents are particularly useful for a wide range of dictionary users”, listing eight such groups, among them “Laypeople who want to quickly look up the definition of an unknown term or search for a forum to ask a question on a certain usage or meaning.”

On a critical note, the last paragraph says “we believe that collaborative lexicography will not replace traditional lexicographic theories, but will provide a different viewpoint that can improve and contribute to the lexicography of the future. Thus, Wiktionary is a rival to expert-built lexicons – no more, no less”, which sounds a bit contradictory. The authors also note that “Lepore (2006: 87) raised a criticism about the large-scale import of lexicon entries from copyright-expired dictionaries such as Webster’s New International Dictionary”. It would have been helpful if the authors had given at least a short explanation of the problem that Lepore described, especially since Lepore’s article[3] itself mentions Wiktionary only very briefly. Overall, the chapter is a good academic-grade presentation of Wiktionary: it is very general and does not go too deeply into details, and although it makes a few vague statements, they provide a good starting point for further research.

Wikipedia as an academic publisher?

Xiao and Askin (2012) looked at whether academic papers could be published on Wikipedia.[4] The paper compares the publishing process on Wikipedia to that of an open-access journal, concluding that Wikipedia’s model of publishing research seems superior, particularly in terms of publicity, cost and timeliness.

The biggest challenges for academic contributions to Wikipedia, they found, revolve around the level of acceptance of Wikipedia in academia, poor integration with academic databases, and technical and conceptual differences between an academic article and an encyclopedic one. However, the paper suffers from several problems. It correctly observes that the closest a Wikipedia article comes to a “final”, fully peer-reviewed status is after having passed the featured article candidate process, but it makes no mention of intermediate steps in Wikipedia’s assessment project, such as B-class, Good Article and A-class reviews; nor is the assessment project itself mentioned. Despite the paper’s focus on the featured-article process, it cites no previous academic work on featured articles (although quite a few such studies have been published). Crucially, the paper disregards the most relevant of Wikipedia’s policies, no original research. Thus, the study fails to consider whether Wikipedia would want to publish academic articles without their undergoing changes to bring them closer to encyclopedic style, an issue that has already arisen numerous times on the site, in particular in the difficulties encountered by some educational projects. In the end, the paper, while well-intentioned, seems to illustrate that university researchers can have quite a different understanding of what Wikipedia is than those more closely connected with the project.

In other news, however, a scientific journal appears to have found a viable way to publish peer-reviewed articles on Wikipedia: The open access journal PLoS Computational Biology has announced[5] that it is starting to publish “Topic Pages” – peer-reviewed texts about specific topics, which are published both in the journal and as a new article on Wikipedia. It is hoped that the Wikipedia versions will be updated and improved by the Wikipedia community. The first example is about circular permutation in proteins.

Wikipedia citations in American law reviews

The article “A Jester’s Promenade: Citations to Wikipedia in Law Reviews, 2002–2008” examines citations of Wikipedia in US law reviews and the appropriateness of this practice.[6] The article seems to be well researched, and its author, law reference/research librarian Daniel J. Baker, demonstrates familiarity with the mechanics of Wikipedia (such as permanent links). For the period 2002–08, Baker identified 1,540 law-review articles that contain at least one citation of Wikipedia, most of them in law reviews dealing with general and “popular” subject matter, with a significant proportion originating from authors with academic credentials.

The article notes that the trend peaked in 2006, attributing the subsequent decline (thereby demonstrating some familiarity with Wikipedia’s history) to a delayed reaction to the Seigenthaler incident and the Essjay controversy. (Since the article’s data analysis ends in 2008, the question of whether the trend has rebounded in recent years is left unanswered.)

The author is highly critical of Wikipedia’s reliability, arguing that a source that “anyone can edit”, and where much of the information is not verified, should not be used in works that may influence legal decisions. Thus Baker calls for stricter rules in legal publishing, in particular that Wikipedia should not be cited. In a more surprising argument, the paper suggests that information found on Wikipedia should be treated as common knowledge and thus does not require referencing (following a similar 2009 recommendation by Brett Deforest Maxfield in “Ethics, politics and securities law: how unethical people are using politics to undermine the integrity of our courts and financial markets”, 35 OHIO N.U. L. REV. 243, 293 (2009)). This argument does, however, raise the question of whether no citation at all is truly better than a citation to Wikipedia; if such a recommendation were followed, it could lead to a proliferation of uncited claims in law reviews that would be assumed (without any verification) to rest on “common knowledge” as represented in the “do not cite” Wikipedia.

One in four articles tagged as flawed, most often for verifiability issues

A paper titled “A Breakdown of Quality Flaws in Wikipedia”[7] examines cleanup tags on the English Wikipedia (using a January 2011 dump), finding that 27.53% of articles are tagged with at least one of 388 different cleanup templates. In a 2011 conference poster[8] (a version of which was summarized in an earlier edition of this newsletter), the authors, together with a third collaborator, analyzed a 2010 dump of the English Wikipedia for a smaller set of tags, arriving at a much lower ratio: “8.52% [of articles] have been tagged to contain at least one of the 70 flaws”. When articles are classified into 24 overlapping topic areas (derived from Category:Main topic classifications), the highest ratios of tagged articles are found in the “Computers” (48.51%), “Belief” (46.33%) and “Business” (39.99%) topics; the lowest are in “Geography” (19.83%), “Agriculture” (22.57%) and “Nature” (23.93%). Of the 388 tags on the more complete list, “307 refer to an article as a whole and 81 to a particular text fragment”. As another original contribution, the authors organize the existing cleanup tags into “12 general flaw types”, the most frequent being “Verifiability” (19.46% of articles have been tagged with one of the corresponding templates), “Wiki tech” (e.g. the “orphan”, “wikify” or “uncategorized” templates; 5.47% of articles) and “General cleanup” (2.01%).

Time evolution of Wikipedia discussions

Kaltenbrunner and Laniado look at the time evolution of Wikipedia discussions, and how it correlates with editing activity, based on 9.4 million comments from the March 12, 2010 dump.[9] Peaks in commenting and peaks in editing often co-occur within two days of each other (for sufficiently large peaks of 20 comments, 63% of the time). They list the articles with the longest comment peaks and the most edit peaks, as well as the 20 slowest and 20 fastest discussions.

The authors note that a single heavy editor can be responsible for edit peaks but not for comment peaks; peaks in discussion activity seem to indicate more widespread interest from multiple people. They find that “the fastest growing discussions are more likely to have long lasting edit peaks” and that some editing peaks are associated with event anniversaries. They use the Barack Obama article as a case study, showing peaks in comments and editing due to news events as well as to internal Wikipedia events (such as an editor poll or article protection). Articles about current events are often edited and discussed in near real time, in contrast to articles about historical or scientific facts.

They use the h-index to assess the complexity of a discussion, and they chart the growth rate of the discussions. For instance, they find that the discussion pages of the three most recent US Presidents show a constant growth in complexity, but at varying rates: Bill Clinton’s talk page took 332 days to increase its h-index by one, while George W. Bush’s took only 71 days.
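To make the notion of a discussion’s h-index concrete, here is a small sketch. The summary does not spell out the definition, so the code assumes one used in the literature on threaded discussions: the largest h such that the thread contains at least h comments at nesting depth exactly h, with top-level comments at depth 1. The input format, a mapping from each comment to its parent, and all function names are likewise hypothetical.

```python
from collections import Counter

def comment_depths(parents):
    """Return the nesting depth of every comment.

    `parents` maps each comment id to its parent id, or to None for a
    top-level comment (depth 1). This input format is an assumption.
    """
    depths = {}
    for comment in parents:
        # Walk up to the first ancestor whose depth is already known (or past the root).
        chain = []
        node = comment
        while node is not None and node not in depths:
            chain.append(node)
            node = parents[node]
        depth = 0 if node is None else depths[node]
        # Assign depths from the topmost unknown ancestor down to the comment.
        for n in reversed(chain):
            depth += 1
            depths[n] = depth
    return depths

def discussion_h_index(parents):
    """Largest h such that at least h comments sit at depth exactly h (assumed definition)."""
    per_depth = Counter(comment_depths(parents).values())
    return max((d for d, n in per_depth.items() if n >= d), default=0)

if __name__ == "__main__":
    # Three top-level comments, three replies at depth 2, one reply at depth 3.
    thread = {"a": None, "b": None, "c": None, "d": "a", "e": "a", "f": "b", "g": "d"}
    print(discussion_h_index(thread))  # 2
```

Under this definition, a talk page’s h-index only grows when the discussion becomes both deeper and busier at that depth, which is why the time needed to raise it by one can serve as a rough measure of how quickly a discussion gains complexity.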

They envision more sophisticated algorithms showing the relative growth in edits and discussions. Their ideas for future work are intriguing – for instance, the question of how to determine article maturity and the level of consensus, based on the network dynamics. (AcaWiki summary)

APWeb2012 papers on admin networks, mitigating language bias and finding “minority information”

Several of the accepted papers of this month’s Asia-Pacific Web Conference APWeb2012 concerned Wikipedia:

- Prototype tool searches for expert admins: In the article “Exploration and Visualization of Administrator Network in Wikipedia”,[10] four Chinese authors examine the collaboration graph of administrators on the English Wikipedia (where two admins are connected by an edge if they have edited the same article during the sampled time span from January 2010 to January 2011), and “define six features to reflect the characteristics of administrator’s work from different respects including diversity of the admin user interests, the influence & importance across the network, and longevity & activity in terms of contribution.” The authors observe that the recognition of an admin’s work by other users in the form of barnstars seems to agree with the overall rank they calculate from these quantities: “By analyzing the profiles of the top ranked fifty admin users as a test case, it has been observed that the number of barn stars received by them also follows the similar trend as we overall ranked the admin users.” To extract topics from an admin’s editing history and measure diversity, the authors use latent Dirichlet allocation (LDA). They describe a prototype called “Administrator Exploration Prototype System”, which displays these various quantitative measures for an admin and allows admins to be ranked by them. In particular, it “will automatically find the expert authors based on the editing history of each admin user”. An example screenshot shows the results of a search for “Expert Admin User” with the keywords “Music, Songs, Singers”, topped by Michig, Mike Selinker and Bearcat. Analyzing the whole network, the authors find a “decreasing trend of the clustering coefficient [which] can also be seen as a symptom of the growing centralization of the network.” Overall, they observe that “the administrator network is a healthy small world community having a small average distances and a strong centralization of the network around some hubs/stars is observed. This shows a considerable nucleus of very active administrators who seems to be omnipresent.”

- Detecting “minority information” on Wikipedia: A paper[11] by two Japanese researchers proposes “a method of searching for minority information that is less-acknowledged and has less popularity in Wikipedia” for a given keyword. “For example, if the user inputs ‘football’ as a majority information keyword, then the system seeks articles having a sentence of ‘….looks like football….’ or similar content of articles about soccer in Wikipedia. It extracts as candidates for minority sports those articles which have few edits and few editors. Then, it performs sports filtering and extracts minority articles from the candidates. In this case, the results are ‘Bandy’, ‘Goalball’, and ‘Cuju’.” The authors constructed a prototype system and tested it.

- Completing Wikipedia articles with information from other language versions: In an article titled “Extracting Difference Information from Multilingual Wikipedia”,[12] four Japanese researchers describe a “method for extracting information which exists in one language version [of Wikipedia], but which does not exist in another language version. Our method specifically examines the link graph of Wikipedia and structure of an article of Wikipedia. Then we extract comparison target articles of Wikipedia using our proposed degree of relevance.” As a motivating example, they note that the English Wikipedia’s coverage of the sport of cricket is much fuller than the Japanese Wikipedia’s, but is spread over several articles beyond the main cricket article. The goal is a system where a (Japanese) user can enter a keyword and receive the “Japanese article with sections of English articles that do not appear in the Japanese article”.
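The administrator network in the first item above, where two admins are linked if they edited the same article, and the clustering coefficient whose decline the authors report can be sketched in plain Python. This sketch assumes the standard average local (Watts–Strogatz) clustering coefficient; the summary does not say which variant the paper uses, and the restriction to the January 2010 to January 2011 time span is left out. The input format of (admin, article) pairs and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

def coedit_graph(edits):
    """Build an undirected co-editing graph as {admin: set of neighbor admins}.

    `edits` is a hypothetical iterable of (admin, article) pairs. Two admins are
    linked if they both edited at least one common article.
    """
    editors_by_article = defaultdict(set)
    for admin, article in edits:
        editors_by_article[article].add(admin)
    # Start with every admin as a node, so admins without co-editors are counted too.
    graph = {admin: set() for admins in editors_by_article.values() for admin in admins}
    for admins in editors_by_article.values():
        for a, b in combinations(sorted(admins), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph

def local_clustering(graph, node):
    """Fraction of pairs of the node's neighbors that are themselves linked."""
    neighbors = graph[node]
    k = len(neighbors)
    if k < 2:
        return 0.0
    links = sum(1 for a, b in combinations(neighbors, 2) if b in graph[a])
    return 2 * links / (k * (k - 1))

def average_clustering(graph):
    """Mean local clustering coefficient over all nodes (0.0 for an empty graph)."""
    if not graph:
        return 0.0
    return sum(local_clustering(graph, n) for n in graph) / len(graph)

if __name__ == "__main__":
    edits = [("A", "x"), ("B", "x"), ("C", "x"), ("C", "y"), ("D", "y")]
    print(round(average_clustering(coedit_graph(edits)), 4))  # 0.5833
```

In the example, A, B and C form a triangle through article x, while C’s extra link to D via article y lowers C’s local coefficient to 1/3. A hub that connects many otherwise unconnected admins pulls the average down in the same way, which is consistent with reading a falling clustering coefficient as a sign of growing centralization.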

Briefly

- Unchanged quality of new user contributions over time. GroupLens PhD candidate Aaron Halfaker (who also collaborates with the Wikimedia Foundation as a contractor research analyst) shared some preliminary results on the quality of new user contributions,[13] part of a larger study currently submitted for publication. The results, based on an analysis of revert rates on the English Wikipedia combined with blind assessment of new editors’ contribution histories, indicate that the quality of new editors’ first contributions has stayed at the same level since 2006. Although “the majority of new editors are not out to obviously harm the encyclopedia (~80 percent), and many of them are leaving valuable contributions to the project in their first editing session (~40 percent)”, today’s user experience for a first-time editor is much more hostile than it used to be, as “the rate of rejection of all good-faith new editors’ first contributions has been rising steadily, and, accordingly, retention rates have fallen.” These results challenge the hypothesis that today’s newbies produce much lower quality contributions than in earlier years.

- Modeling Wikipedia’s community formation processes. An important factor behind the success of Wikipedia is its own internal culture. Like any social group, a community of peer production has its own rules, norms, and customs. A recently defended doctoral dissertation in computer science argues that, unlike in traditional social groups, the formation of these traits involves, and often determines, how content is produced. The dissertation, by former Summer of Research fellow and Wikimedia Foundation contractor analyst Giovanni Luca Ciampaglia,[14] uses computer simulation to study how the Wikipedia community may have developed its specific cultural traits and distinctive sociological features. Starting from the distribution of user account lifespans in five of the largest Wikipedia communities (English, German, Italian, French, and Portuguese), this work shows how the statistical patterns of the data can be reproduced by a simple model of cultural formation based on principles taken from self-categorization theory and social judgment theory.

The research finds that an important factor in determining whether a community will be able to sustain itself and thrive is the degree of openness of individual users towards differing points of view, which may be critical in the early stages of user participation, when a newcomer first comes into contact with the body of social norms that the community has devised. The thesis concludes that simulation techniques, when supplemented with empirical methods and quantitative calibration, may become an important tool for conducting sociological studies.

- Matching reader feedback via the Article Feedback Tool to editor peer review: An upcoming presentation at Wikimania 2012[15] compares data gathered from version 4 of the Article Feedback Tool (AFT) on the English Wikipedia over summer 2011 with ratings assigned by various peer review processes, e.g. good and featured articles. As might be expected, articles at any point in the peer review process tend to be rated more highly by reviewers, but this difference is highly sensitive to article length. Once length is accounted for (using a variety of methods), the differences between demoted or not-promoted articles and unrated articles disappear. The research also offers a broad snapshot of the AFT dataset as well as some suggestions for future AFT design. Future revisions of the draft, as well as the presentation, will examine the dynamic relationship between peer-reviewed status and reader feedback, exploiting articles’ entry into and exit from various categories for identification.

- Referencing of Wikipedia in academic works is continuing unabated: An article in the “Research Trends” newsletter published by the bibliographic database Scopus, titled “The influence of free encyclopedias on science”,[16] charts the number of papers in Scopus that are either about Wikipedia or cite it. Considering that Wikipedia was only founded in 2001 (i.e. that these numbers necessarily started from zero right before the observed timespan), the author’s astonishment at the compound annual growth rates for both kinds of papers from 2002 to 2011 (which she calls “staggering” and “unbelievable”, respectively) is somewhat surprising; however, the article also gives the growth rates for the five years from 2007 to 2011 (ca. 19% per year for Wikipedia as a subject, ca. 31% per year for Wikipedia as a reference). Interestingly, Scholarpedia appears to be the second most cited online encyclopedia, although it lags far behind Wikipedia (5%).

- Using Wikipedia to drive traffic to library collections: In an article titled “Wikipedia Lover, Not a Hater: Harnessing Wikipedia to Increase the Discoverability of Library Resources”[17] in the Journal of Web Librarianship, two librarians from the University of Houston Libraries and a former intern report how they successfully used Wikipedia to drive traffic to the collection of the institution’s digital services department (UHDS), progressing from merely inserting links into articles to uploading images from the collection to Commons (whose file description pages still contain such links): “Originally, UHDS intended to contribute exclusively to the External Links section of existing Wikipedia articles. [However, over time] UHDS staff found it was much more effective to match digital items with Wikipedia articles and to share those items in Wikimedia Commons (WMC) rather than (or in addition to) the External Links section of the articles.” While few statistics are given, the authors emphasize the effectiveness of their actions, which was observed from the very first attempts: “Within hours of posting external links to existing Wikipedia articles, the digital library received hits to those collections at a surprisingly high rate.” The article on the 1915 Galveston hurricane is named as an example of an entry enriched with such images. Among the successful additions to external links sections was one in the article about former US president George H. W. Bush, where the student intern linked a photograph showing Bush shaking hands with former University of Houston chancellor Philip G. Hoffman (as already noted in the Signpost’s April 2011 coverage after the authors had presented their project at the annual meeting of the Association of College and Research Libraries: “Experts and GLAMs – contributing content or ‘just’ links to Wikipedia?”).
Much of the paper describes basic technicalities of Wikipedia: uploading images and using contributions lists, talk pages and watchlists. While Wikipedia’s external links guidelines are not cited in the paper, it notes that “contributing effectively to Wikipedia and WMC entailed a steep learning curve in order to align contributions with the granular and well-enforced Wikipedia guidelines for use”, and mentions policies against advertising among them. As one unresolved problem for such institutional use of Wikipedia and Commons, the paper describes the prohibition “to share an editor username with other editors, and [that] organizational usernames are considered a violation of Wikipedia guidelines forbidding the promotion of organizations. When the pilot project transitioned into a permanent departmental program, UHDS staff struggled to devise a way that others on staff could continue to monitor previous edits and uploads and create new ones”, e.g. due to the lack of shared watchlists.

- Weekly and daily activity patterns distinguish Wikipedia from commercial sites: Two Finnish researchers analyzed[18] the distribution of timestamps in the recent changes RSS feeds of four language versions (Arabic, Finnish, Korean, and Swedish; Arabic was chosen because its speakers are spread over “a very wide range of timezones”, in contrast to the other three), and in the RSS news feeds of BBC World News “and the leading Finnish daily newspaper Helsingin Sanomat”. The main difference found between the activity on those two sites (which the authors describe as “commercial news sites”) and on the Wikipedias was that Wikipedia edits “distribute fairly equally over all days in all cases. The drop of activity on weekends that occurred with the commercial news services is not visible in the Wikipedias, quite the opposite, with Sundays typically seeing the highest average level of activity. Only the Arabic version has a slightly lower activity rate in Sundays, however, we should remember the fact that in Arabic countries the weekend falls on Friday-Saturday or in some countries on Thursday-Friday”. The diurnal patterns are found to be “more spread out” on Wikipedia, where “the activity levels follow natural diurnal rhythms. Interestingly, a great number of changes are made during working hours, which leads us to 2 different, but not mutually exclusive, conjectures about the people who edit Wikipedia. Either, the editors are people with ‘free’ time during the day, e.g., students, or people actually edit Wikipedia during the working hours at work. Our methodology is not able to answer this question”.

Furthermore, the authors offer a rather far-reaching but (if proven) significant conjecture based on their data: “Cultural and geographical differences in the Wikipedias we studied seemed to have very little effect on the level of activity. This leads us to speculate that the ‘trait’ of editing Wikipedia is something to which individuals are drawn, not something specific to certain cultures.”

Last year, papers by two other teams (covered in the September issue of this newsletter: “Wikipedians’ weekends in international comparison”, but missing from the “Related work” section of the present paper) had similarly examined daily and weekly patterns on Wikipedia, reaching different results; in particular, different language Wikipedias showed different weekly patterns.

- Simple English Wikipedia is only partially simpler; controversy reduces complexity: “A practical approach to language complexity: a Wikipedia case study”[19] analyzed samples of articles from the English Wikipedia and the Simple English Wikipedia from the end of 2010 with respect to the Gunning fog index as well as other measures of language complexity. Comparing them with other corpora, including Charles Dickens’s books, the authors observe that “Remarkably, the fog index of Simple English Wikipedia is higher than that of Dickens, whose writing style is sophisticated but doesn’t rely on the use of longer latinate words which are hard to avoid in an encyclopedia. The British National Corpus, which is a reasonable approximation to what we would want to think of as ‘English in general’ is a third of the way between Simple and Main, demonstrating the accomplishments of Simple editors, who pushed Simple half as much below average complexity as the encyclopedia genre pushes Main above it.” However, the number of distinct tokens used (a measure of vocabulary richness) is almost the same in the English and Simple English Wikipedia samples (which were chosen to be of the same size). Still, “detailed analysis of longer units (n-grams rather than words alone) shows that the language of Simple is indeed less complex”. In another finding, the authors “investigate the relation between conflict and language complexity by analysing the content of the talk pages associated to controversial and peacefully developing articles, concluding that controversy has the effect of reducing language complexity.”

- Contributions from South America. “Mapping Wikipedia edits from South America”,[20] the latest in a series of studies and visualizations by Oxford Internet Institute researcher Mark Graham and his team, reports that almost half of all edits to Wikipedia from South America come from Brazil, which is unsurprising considering that Brazil has the largest population of Internet users in South America. More interestingly, Chile, a country with only 5–6% of the continent’s Internet population, contributes more than 12% of the edits.

- Deaths generate edit bursts: A student paper titled “Death and Change Tracking: Wikipedia Edit Bursts”[21] examines editing activity on the English Wikipedia articles about nine celebrity actors after the actors’ deaths.

- Searching by example: This month’s WWW 2012 conference in Lyon, France saw a demo titled “SWiPE: Searching Wikipedia by Example”[22], showcasing a tool where the user can search for articles similar to a given one by modifying entries in that article’s infobox, and also ask questions in natural language.

- Wikipedia in the eyes of PR professionals. A study published in the journal of the Public Relations Society of America (PRSA)[23] surveyed public relations and communications professionals about their perception of Wikipedia contribution and conflict of interest. The online survey was pilot-tested with members of the Corporate Representatives for Ethical Wikipedia Engagement (many of whom have recently pushed for Wikipedia to let PR professionals edit articles about their clients to a greater extent) and produced 1,284 usable responses after being disseminated via various outlets. The results indicate that “of the 35% who had engaged with Wikipedia, most did so by making edits directly on the Wikipedia articles of their companies or clients”. The response time to issues reported on talk pages was found to be an important barrier in the interaction between Wikipedia community members and PR professionals. The author observes that “when the wait becomes too long, the content is defamatory, or a dispute with a Wikipedian needs to be elevated, there are resources to help. Unfortunately, only a small percentage of the respondents in this study had used them and many had never heard of these resources”. A separate result of the paper, offered as another argument against the “bright line” rule advocated by Jimmy Wales (which says that PR professionals should not edit Wikipedia articles they are involved in), has met with heavy criticism from Wikimedians regarding statistical biases and other issues (see e.g. last week’s Signpost coverage: “Spin doctors spin Jimmy’s ‘bright line’”): 32% of the respondents said that “there are currently factual errors on their company or client’s Wikipedia articles”, corresponding to 41% of those respondents who said that such articles existed, or 60% of those who said that such articles existed and did not reply “don’t know” to that question.
The press releases of the author’s college and of PRSA interpreted the result as “Sixty percent of Wikipedia articles about companies contain factual errors”, although the latter was updated after the criticism “to clarify the survey findings described in this press release and help prevent any misinterpretation of the data that this release may have caused”.

- Wikipedia coverage of marketing terms found accurate: The proceedings of the recent International Collegiate Conference of the American Marketing Association (AMA) offer a more positive view of Wikipedia from the field of marketing: “Is Wikipedia A Reliable Tool for Marketing Educators and Students? A Surprising Heck Yes!”[24] The paper takes a more systematic approach to assessing the quality of Wikipedia articles than the PRSA study and focuses on the AMA’s area of expertise. Starting from a “random sample of marketing glossary terms [that] were collected from 3 marketing management textbooks and 4 marketing principles textbooks”, it rated the corresponding Wikipedia entries from 1 to 3 following a standard content-analysis procedure: “Each textbook definition was compared to the corresponding Wikipedia definition and rated using a 3-point Likert scale where 1=Correct Definition, 2=Correct but difficult to find the term or the definition was not easy to decipher, or 3=Incorrect definition when compared to the textbook term.” Of the 459 items in the final sample, only five were rated 3, and “the average score across all textbooks was a 1.18 demonstrating Wikipedia is an accurate source of marketing content.”

- Wikipedia’s osteosarcoma coverage assessed: An abstract published in the Journal of Bone & Joint Surgery[25] finds “that the quality of osteosarcoma-related information found in English Wikipedia is good but inferior to the patient information provided by the National Cancer Institute”. The abstract refers to a study and results that appear to be identical to those reported in a 2010 viewpoint article in the Journal of the American Medical Informatics Association (JAMIA) (Signpost coverage).

- Wikipedia assignments for Finnish school students: A paper by three Finnish authors[26] describes course assignments for upper secondary school students (aged 16–18) involving “writing articles for Wikipedia (a public wiki) and for the school’s own wiki”, in subject areas including biology, geography and Finnish history. In particular, the paper reports that “a carefully planned library [visit] can help to activate students to use printed materials in their source-based writing assignments”, and that its “findings corroborate the generally held view that students tend to copy-paste and plagiarise, especially when exploiting Web sources.”

- Wikipedia as a thermodynamic system – becoming more efficient over time: A paper titled “Thermodynamic Principles in Social Collaborations”[27] (presented at this month’s Collective Intelligence 2012 conference) applies principles and concepts from statistical mechanics to collaboration on the English Wikipedia. The analogy is based on interpreting a user’s edit count as the energy level of a particle, and posits a “logarithmic energy model” for edits, which assumes a “decreasing effort required for a given user to make additional edits in a relatively short period of time (e.g., one month) or to a particular page”. (According to the authors, this contrasts with two other theories that also explain the observed power-law distribution of edit counts: the “Wikipedia editors are ‘born’” notion, which assumes that different users need to expend different amounts of energy on the same kind of edits due to “an extreme heterogeneity of preference among the potential user population”, and the “Wikipedia editors are ‘made’” notion, which sees positive or negative feedback from other users as the defining influence.) Using the analogy, the authors define the entropy, free energy, temperature, entropy efficiency and entropy reduction of an editing community and its edits during a particular timespan. They then calculate the latter two for each month of the English Wikipedia’s history from January 2002 to December 2009. They conclude that “Wikipedia has become more efficient in terms of entropy efficiency, and more ordered according to entropy reduction. The increasing power-law coefficient causes the shift of the contributions from elites to crowd. The saturation of free energy reduction ratio may cause the saturation of the active editors.” The next section finds that “entropy efficiency is correlated with the quality of the social collaboration”, and one figure is interpreted as implying “that the nature of Wikipedia is a true media of the masses, where pages produced by crowd wisdom will have higher quality and thus more readership compared to that produced by a few elites.”

- Too many docs don’t spoil the broth: Another paper[28] presented at the Collective Intelligence 2012 conference similarly found “that the number of contributors has a curvilinear relationship to information quality, more contributors improving quality but only up to a certain point”, based on an examination of 16,068 articles within the scope of WikiProject Medicine.

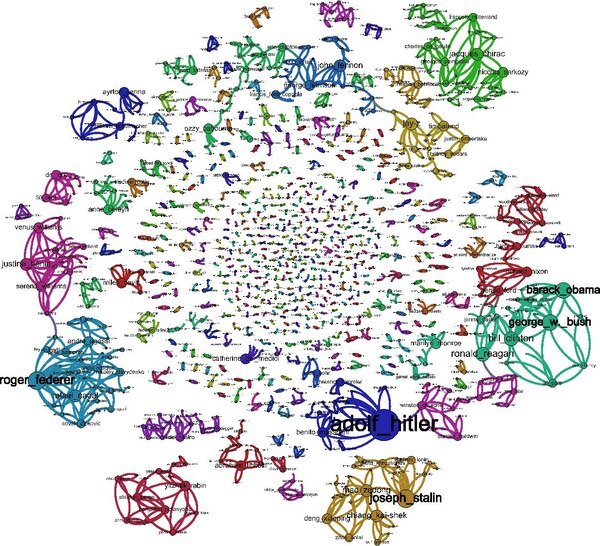

- The most influential biographies vary by language and culture: The Barcelona Media Foundation studied “the most influential characters” in the 15 largest Wikipedia language editions[29] by asking which biographies are most linked to (“central”) from other Wikipedia biography articles. Political and artistic biographies are the most central, and the particular biographies depend on the language. They found, for instance, that Shakespeare’s biography is among the most important in the Russian, Chinese, Spanish, and Dutch editions, but not in the English one. They also estimated the Jaccard similarity coefficient between the social networks of different language editions: most of the similarity can be explained by language family and by geographical or historical ties. One interesting finding is that Dutch “seems to serve as a bridge between different language and cultural groups”. Some social connections are very common, and the authors produce a graph of the connections found in at least 13 of the language editions. They note that articles on people from non-Anglo-Saxon cultures may be missing if those people are not known internationally, since the initial list of notable people is extracted from DBpedia. A blog post on Technology Review highlighted the fact that in the paper’s table of most connected biographies (listing the top 5 from each of the 15 language versions), among the 75 entries “only three are women: Queen Elizabeth II, Marilyn Monroe and Margaret Thatcher”, which it interprets as one of “The Worrying Consequences of the Wikipedia Gender Gap”. (Summary at AcaWiki)
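The three percentages in the PRSA survey item above differ only in their denominators. Assuming, as “corresponding to” suggests, that all three describe the same respondents (those reporting factual errors), the reported shares alone imply how large each denominator is and how many respondents answered “don’t know”. The short Python sketch below does that arithmetic; because the published percentages are rounded, its results are approximate.

```python
# Shares reported in the PRSA survey summary. Assumption: all three describe the
# same respondents (those reporting factual errors) relative to successively
# narrower denominators, as the wording "corresponding to" suggests.
SHARE_OF_ALL = 0.32            # of all respondents
SHARE_OF_WITH_ARTICLES = 0.41  # of respondents whose company/client has an article
SHARE_OF_DEFINITE = 0.60       # of those, excluding "don't know" answers


def implied_denominator(numerator: float, reported_share: float) -> float:
    """Size of the denominator (as a fraction of all respondents) for which
    numerator / denominator equals the reported share."""
    return numerator / reported_share


with_articles = implied_denominator(SHARE_OF_ALL, SHARE_OF_WITH_ARTICLES)
definite = implied_denominator(SHARE_OF_ALL, SHARE_OF_DEFINITE)
# Fraction of respondents with articles who answered "don't know".
dont_know = 1 - definite / with_articles

print(f"with articles: {with_articles:.0%}")
print(f"definite answer: {definite:.0%}")
print(f"'don't know' among those with articles: {dont_know:.0%}")
```

Run as written, it prints roughly 78%, 53% and 32%: under this assumption, about a third of the respondents who said an article existed answered “don’t know”, and dropping them is what lifts the figure from 41% to 60%.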
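For the marketing-glossary study above, the two reported numbers (five items rated 3 out of 459, and a mean of 1.18) also constrain how many items were rated 2. The sketch below assumes that 1.18 is a pooled mean over all 459 items; the paper describes it as “the average score across all textbooks”, which could instead be an average of per-textbook means, in which case this calculation does not apply.

```python
N_ITEMS = 459        # items in the final sample (reported)
N_INCORRECT = 5      # items rated 3 = "incorrect" (reported)
REPORTED_MEAN = 1.18  # reported average rating


def pooled_mean(n_rated_2: int, n_rated_3: int = N_INCORRECT, n: int = N_ITEMS) -> float:
    """Mean rating on the 1-3 scale, assuming every remaining item is rated 1."""
    n_rated_1 = n - n_rated_2 - n_rated_3
    return (1 * n_rated_1 + 2 * n_rated_2 + 3 * n_rated_3) / n


# Counts of "2" ratings whose pooled mean rounds to the reported 1.18.
consistent = [k for k in range(N_ITEMS - N_INCORRECT + 1)
              if round(pooled_mean(k), 2) == REPORTED_MEAN]

print(f"share rated incorrect: {N_INCORRECT / N_ITEMS:.1%}")
print(f"'2' ratings consistent with a mean of 1.18: {consistent}")
```

Under the pooled-mean assumption, it reports that 1.1% of items were rated incorrect and that only 71 to 74 items rated 2 (about 16% of the sample) fit the reported mean.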
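The thermodynamics item above says a logarithmic energy model can account for the power-law distribution of edit counts. Under two assumptions of ours, which are not necessarily the authors’ exact formulation (the marginal effort of an editor’s n-th edit falls off as c/n, and edit counts follow a Boltzmann distribution at “temperature” T), the link can be sketched as follows:

```latex
% Assumption 1: marginal effort of the n-th edit decays as c/n, with c > 0
\frac{dE}{dn} = \frac{c}{n}
\quad\Longrightarrow\quad
E(n) = c \ln n \qquad (\text{taking } E(1) = 0)

% Assumption 2: edit counts follow a Boltzmann distribution at temperature T
P(n) = \frac{e^{-E(n)/T}}{Z} = \frac{n^{-c/T}}{Z},
\qquad Z = \sum_{m \ge 1} m^{-c/T}
```

The logarithmic energy turns the exponential Boltzmann weight into a power law with exponent c/T, a straight line of slope -c/T on a log-log plot; the normalizing sum Z is finite only when c/T > 1.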
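One common way to capture a curvilinear “more helps, but only up to a point” relationship, like the one reported in the WikiProject Medicine item above, is a regression with a squared term, whose inverted-U curve peaks at n = -b1 / (2·b2). We assume that form here; the paper may have used a different specification, and the coefficients below are invented for illustration, not its estimates.

```python
def predicted_quality(n: float, b0: float, b1: float, b2: float) -> float:
    """Quadratic model of quality as a function of the number of contributors n."""
    return b0 + b1 * n + b2 * n ** 2


def turning_point(b1: float, b2: float) -> float:
    """Contributor count at which an inverted-U quadratic (b2 < 0) peaks."""
    if b2 >= 0:
        raise ValueError("an inverted U requires a negative quadratic term")
    return -b1 / (2 * b2)


# Hypothetical coefficients, chosen only to show the shape of the curve.
B0, B1, B2 = 1.0, 0.5, -0.0125

peak = turning_point(B1, B2)  # about 20 contributors for these made-up numbers
for n in (5, 10, 20, 30, 40):
    print(n, round(predicted_quality(n, B0, B1, B2), 3))
```

With a negative squared term, each additional contributor helps less than the previous one, and past the turning point the model predicts lower quality.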
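The biography-network item above rests on two simple measures: how often a biography is linked to from other biographies (its in-degree, used as “centrality”) and the Jaccard similarity |A ∩ B| / |A ∪ B| between two language editions. The Python sketch below computes both on hypothetical toy networks, not the paper’s data; treating each edition’s network as a set of directed links is our reading of how the comparison could be done.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

Link = Tuple[str, str]  # (linking biography, linked biography)


def in_degree(links: Iterable[Link]) -> Counter:
    """Number of incoming links for each linked-to biography."""
    return Counter(target for _, target in links)


def jaccard(a: Set[Link], b: Set[Link]) -> float:
    """Jaccard similarity |A & B| / |A | B|; defined as 1.0 for two empty sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# Hypothetical toy networks for two language editions (not the paper's data).
edition_x = {("A", "B"), ("C", "B"), ("D", "B"), ("D", "C")}
edition_y = {("A", "B"), ("C", "B"), ("A", "C")}

print(in_degree(edition_x).most_common(1))  # most "central" biography in X
print(jaccard(edition_x, edition_y))        # shared links / all links
```

Here biography B is the most central in the first edition (three incoming links), and the two editions share two of five distinct links, a Jaccard similarity of 0.4.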

References

- ↑ Restivo, M. & van de Rijt, A. (2012). Experimental Study of Informal Rewards in Peer Production. PLoS ONE 7(3): e34358. PDF • DOI

- ↑ a b Meyer, C. M., & Gurevych, I. (2012). Wiktionary: a new rival for expert-built lexicons? Exploring the possibilities of collaborative lexicography. In S. Granger & M. Paquot (Eds.), Electronic Lexicography. Oxford: Oxford University Press. PDF

- ↑ Lepore, J. (2006). Noah’s Mark, The New Yorker, November 6, 2006, pp. 78-86. HTML

- ↑ Xiao, L., & Askin, N. (2012). Wikipedia for Academic Publishing: Advantages and Challenges. Online Information Review, 36(3), 2. Emerald Group Publishing Limited. HTML

- ↑ (2012) “Topic Pages: PLoS Computational Biology Meets Wikipedia”. PLoS Computational Biology 8 (3): e1002446. doi:10.1371/journal.pcbi.1002446.

- ↑ Baker, D. J. (2012). A Jester’s Promenade: Citations to Wikipedia in Law Reviews, 2002–2008. I/S: A Journal of Law and Policy for the Information Society, 7(2):1–44. PDF

- ↑ Anderka, M., & Stein, B. (2012). A breakdown of quality flaws in Wikipedia. Proceedings of the 2nd Joint WICOW/AIRWeb Workshop on Web Quality – WebQuality ’12 (p. 11). New York: ACM Press. DOI • PDF

- ↑ Anderka, M., Stein, B., & Lipka, N. (2011). Towards automatic quality assurance in Wikipedia. Proceedings of the 20th international conference companion on World Wide Web – WWW ’11. New York: ACM Press. DOI • PDF

- ↑ Kaltenbrunner, A., & Laniado, D. (2012). There is No Deadline – Time Evolution of Wikipedia Discussions. arXiv. Computers and Society; Physics and Society. PDF

- ↑ Yousaf, J., Li, J., Zhang, H., & Hou, L. (2012). Exploration and Visualization of Administrator Network in Wikipedia. In: Q. Z. Sheng, G. Wang, C. S. Jensen, & G. Xu (Eds.), Web Technologies and Applications, Lecture Notes in Computer Science 7235:46-59. Berlin, Heidelberg: Springer Berlin Heidelberg. DOI

- ↑ Hattori, Y., & Nadamoto, A. (2012). Search for Minority Information from Wikipedia Based on Similarity of Majority Information. In: Q. Z. Sheng, G. Wang, C. S. Jensen, & G. Xu (Eds.) Web Technologies and Applications, Lecture Notes in Computer Science 7235:158-169. Berlin, Heidelberg: Springer Berlin Heidelberg. DOI

- ↑ Fujiwara, Y., Suzuki, Y., Konishi, Y., & Nadamoto, A. (2012). Extracting Difference Information from Multilingual Wikipedia. In: Q. Z. Sheng, G. Wang, C. S. Jensen, & G. Xu (Eds.) Web Technologies and Applications, Lecture Notes in Computer Science 7235:496-503. Berlin, Heidelberg: Springer Berlin Heidelberg. DOI

- ↑ a b Halfaker, A. (2012). Kids these days: the quality of new Wikipedia editors over time. Wikimedia Foundation blog. HTML

- ↑ Ciampaglia, G. L. (2011). User participation and community formation in peer production systems. PhD Thesis, Università della Svizzera Italiana. PDF

- ↑ a b Hyland, A. (2012). Comparing article quality by article class and article feedback ratings. Wikipedia. HTML

- ↑ Huggett, S. (2012). The influence of free encyclopedias on science. Research Trends, (27). HTML

- ↑ Elder, D., Westbrook, R. N., & Reilly, M. (2012). Wikipedia Lover, Not a Hater: Harnessing Wikipedia to Increase the Discoverability of Library Resources. Journal of Web Librarianship, 6(1), 32-44. Routledge. DOI

- ↑ Karkulahti, O., & Kangasharju, J. (2012). Surveying Wikipedia activity: Collaboration, commercialism, and culture. The International Conference on Information Network 2012 (pp. 384-389). IEEE. DOI

- ↑ Yasseri, T., Kornai, A., & Kertész, J. (2012). A practical approach to language complexity: a Wikipedia case study. arXiv. Computation and Language. PDF

- ↑ Graham, M. (2012). Mapping Wikipedia edits from South America. Zero Geography. HTML

- ↑ Lincoln, M. (2012). Death and Change Tracking: Wikipedia Edit Bursts. PDF

- ↑ Atzori, M., & Zaniolo, C. (2012). SWiPE: Searching Wikipedia by example. Proceedings of the 21st international conference companion on World Wide Web – WWW ’12 Companion (p. 309). New York: ACM Press. DOI • PDF

- ↑ DiStaso, M. W. (2012). Measuring Public Relations Wikipedia Engagement: How Bright is the Rule? Public Relations Journal, 6(2). HTML

- ↑ Gray, D. M., & Peltier, J. (2012). Is Wikipedia a Reliable Tool for Marketing Educators and Students? A Surprising Heck Yes! Marketing Always Evolving. 34th Annual International Collegiate Conference. PDF

- ↑ Leithner, A., Maurer-Ertl, W., Glehr, M., Friesenbichler, J., Leithner, K., & Windhager, R. (2012). Wikipedia and Osteosarcoma: An educational opportunity and professional responsibility for Emsos. Journal of Bone & Joint Surgery, BR, 94-B(SUPP XIV), 13. British Editorial Society of Bone and Joint Surgery. HTML

- ↑ Sormunen, E., Eriksson, H., & Kurkipää, T. (2012). Wikipedia and wikis as forums of information literacy instruction in schools. The Road to Information Literacy: Librarians as Facilitators of Learning. IFLA 2012 Congress Satellite Meeting (pp. 1-23). PDF

- ↑ Peng, H.-K., Zhang, Y., Pirolli, P., & Hogg, T. (2012). Thermodynamic Principles in Social Collaborations. arXiv. Physics and Society. PDF

- ↑ Kane, G. C., & Ransbotham, S. (2012). Collaborative Development in Wikipedia. arXiv. PDF

- ↑ a b Aragón, P., Kaltenbrunner, A., Laniado, D., & Volkovich, Y. (2012). Biographical Social Networks on Wikipedia – A cross-cultural study of links that made history. arXiv. Computers and Society; Physics and Society. PDF

Wikimedia Research Newsletter

This newsletter is brought to you by the Wikimedia Research Committee and The Signpost
