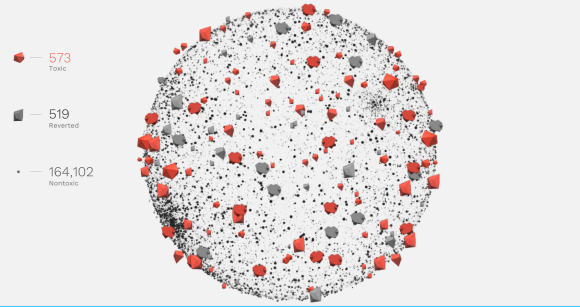

3D representation of 30 days of Wikipedia talk page revisions, of which 1,092 contained toxic language (shown in red if live, grey if reverted) and 164,102 were non-toxic (shown as dots). Visualization by Hoshi Ludwig, CC BY-SA 4.0.

“What you need to understand as you are doing the ironing is that Wikipedia is no place for a woman.” –An anonymous comment on a user’s talk page, March 2015

Volunteer Wikipedia editors coordinate much of their work through online discussions on "talk pages," which are attached to every article and user page on the platform. But as the quote above demonstrates, these discussions are not always good-faith collaboration and exchanges of ideas; they are also an avenue for harassment and other toxic behavior.

Harassment is not unique to Wikipedia; it is a pervasive issue for many online communities. A 2014 Pew survey found that 73% of internet users have witnessed online harassment and 40% have personally experienced it. To better understand how contributors to Wikimedia projects experience harassment, the Wikimedia Foundation ran an opt-in survey in 2015. About 38% of the editors surveyed had experienced some form of harassment, and over half of those contributors reported feeling less motivated to contribute to Wikimedia sites in the future.

Early last year, the Wikimedia Foundation kicked off a research collaboration with Jigsaw, a technology incubator within Google's parent company, Alphabet, to better understand the nature and impact of harassment on Wikipedia and to explore technical solutions. In particular, we have been applying machine learning methods to build models that automatically detect toxic comments on users' talk pages. We are using these models to analyze the prevalence and nature of online harassment at scale. This data will help us prototype tools that visually depict harassment and help administrators respond.

Our initial research has focused on personal attacks, a blatant form of online harassment that usually manifests as insults, slander, obscenity, or other ad hominem attacks. To amass enough data for a supervised machine learning approach, we collected 100,000 comments from English Wikipedia talk pages and had 4,000 crowd-workers judge whether each comment was harassing, producing 1 million annotations in total. Each comment was rated by 10 crowd-workers, whose judgments were aggregated and used to train our model.
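The post does not spell out how the 10 judgments per comment were combined. The following is a minimal sketch that assumes a simple majority vote and also keeps the fraction of workers who flagged the comment as a soft score. The function name `aggregate_annotations` and the `(comment_id, is_attack)` input format are hypothetical.

```python
from collections import defaultdict


def aggregate_annotations(annotations, threshold=0.5):
    """Collapse per-worker judgments into one label per comment (hypothetical helper).

    annotations: iterable of (comment_id, is_attack) pairs, one per worker judgment.
    Returns {comment_id: (attack_fraction, is_attack_label)}.
    """
    votes = defaultdict(list)
    for comment_id, is_attack in annotations:
        votes[comment_id].append(1 if is_attack else 0)

    result = {}
    for comment_id, labels in votes.items():
        fraction = sum(labels) / len(labels)
        # Assumed rule: label as an attack only if strictly more than
        # `threshold` of the workers flagged it.
        result[comment_id] = (fraction, fraction > threshold)
    return result
```

Keeping the fraction alongside the hard label lets a downstream model train on either one. Under the strict `>` rule assumed here, a 5-5 split counts as not an attack.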

To our knowledge, this is the largest public annotated dataset of personal attacks. Alongside this labeled set of comments, we are releasing a corpus of all 95 million user and article talk page comments made between 2001 and 2015. Both datasets are available on FigShare, a repository where researchers can share data, to support further research.

The machine learning model we developed was inspired by recent research at Yahoo on detecting abusive language. The idea is to extract fragments of text from Wikipedia edits and feed them into a machine learning algorithm called logistic regression, which produces an estimate of the probability that an edit is a personal attack. In testing, we found that the fully trained model predicts whether an edit is a personal attack better than the combined average of 3 human crowd-workers.
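To make the idea concrete, here is a minimal, self-contained sketch of logistic regression over text fragments. It assumes that "fragments" means character n-grams of length 1 to 3, uses L2-normalized n-gram counts as features, and trains with plain stochastic gradient descent. The names `featurize` and `AttackClassifier`, the hyperparameters, and the toy comments are all hypothetical; this is not the model described in the paper.

```python
import math
from collections import Counter


def featurize(text, n_min=1, n_max=3):
    """Map text to L2-normalized counts of its character n-grams ("fragments")."""
    text = text.lower()
    counts = Counter()
    for n in range(n_min, n_max + 1):
        for i in range(len(text) - n + 1):
            counts[text[i:i + n]] += 1
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {gram: c / norm for gram, c in counts.items()}


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class AttackClassifier:
    """Hypothetical logistic-regression attack scorer trained with SGD."""

    def __init__(self, lr=0.5, epochs=200, l2=1e-4):
        self.lr = lr          # SGD step size
        self.epochs = epochs  # passes over the training data
        self.l2 = l2          # L2 penalty on the weights each example touches
        self.weights = {}     # one weight per n-gram seen in training
        self.bias = 0.0

    def _logit(self, feats):
        return self.bias + sum(self.weights.get(g, 0.0) * x for g, x in feats.items())

    def fit(self, texts, labels):
        data = [(featurize(t), y) for t, y in zip(texts, labels)]
        for _ in range(self.epochs):
            for feats, y in data:
                # For log loss, the gradient with respect to the logit is (p - y).
                err = sigmoid(self._logit(feats)) - y
                self.bias -= self.lr * err
                for g, x in feats.items():
                    w = self.weights.get(g, 0.0)
                    self.weights[g] = w - self.lr * (err * x + self.l2 * w)
        return self

    def predict_proba(self, text):
        """Estimated probability that `text` is a personal attack."""
        return sigmoid(self._logit(featurize(text)))


if __name__ == "__main__":
    # Toy, made-up comments (1 = personal attack, 0 = not an attack).
    texts = ["you are an idiot", "shut up you stupid troll",
             "thanks for fixing the citation", "i added a source to the article"]
    labels = [1, 1, 0, 0]
    model = AttackClassifier().fit(texts, labels)
    for comment in ["what an idiot", "thanks for the source"]:
        print(f"{model.predict_proba(comment):.2f}  {comment}")
```

Character-level features are a common choice for noisy user text because a misspelled insult still shares many fragments with its correct spelling. A production model would also need far more data, held-out evaluation, and threshold tuning.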

Before this work, the primary way to determine whether a comment was an attack was to have a human annotate it, a costly and time-consuming approach that could cover only a small fraction of the 24,000 edits to discussions made on Wikipedia every day. Our model lets us examine every edit as it occurs and determine whether it is a personal attack. This in turn lets us ask more complex questions about how users experience harassment. Some of the questions we were able to examine include:

- How often are attacks moderated? Only 18% of attacks were followed by a warning or a block of the offending user. Even among users who have made four or more attacks, only 60% were ever moderated.

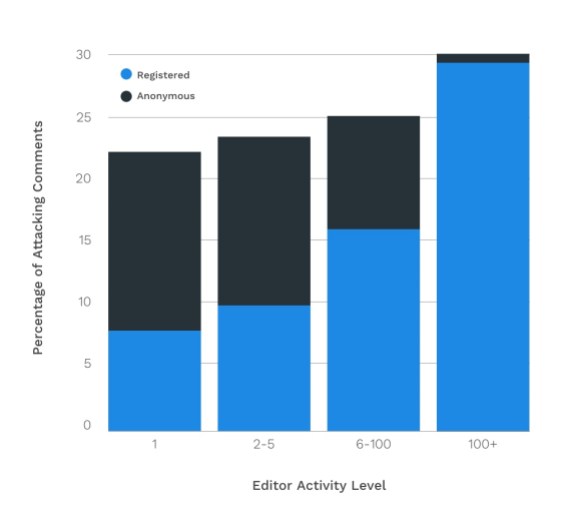

- What is the role of anonymity in personal attacks? Registered users make two-thirds (67%) of attacks on English Wikipedia, contradicting the widespread assumption that anonymous comments by unregistered contributors are the main source of the problem.

- How frequent are attacks from regular vs. occasional contributors? Both prolific and occasional editors are responsible for a large proportion of attacks (see figure below). While half of all attacks come from editors who make fewer than 5 edits a year, a third come from registered users with more than 100 edits a year.

Chart by Nithum Thain, CC BY-SA 4.0.
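Once every comment has been scored, the statistics in the list above reduce to simple proportions over the flagged attacks. The following is a minimal sketch that assumes a hypothetical `Attack` record noting the author, whether the author was registered, the author's yearly edit count, and whether a warning or block followed. It also assumes that a repeat offender counts as moderated if any of their attacks was followed by moderation. The paper's exact definitions may differ.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Attack:
    """Hypothetical record for one comment the classifier flagged as an attack."""
    user: str            # author of the comment
    registered: bool     # False for unregistered (anonymous) contributors
    edits_per_year: int  # author's yearly edit count
    moderated: bool      # followed by a warning or block of the author


def summarize(attacks):
    """Shares of attacks behind the kinds of statistics listed above."""
    if not attacks:
        raise ValueError("need at least one attack")
    n = len(attacks)
    return {
        "moderated": sum(a.moderated for a in attacks) / n,
        "by_registered_users": sum(a.registered for a in attacks) / n,
        "by_under_5_edits_per_year": sum(a.edits_per_year < 5 for a in attacks) / n,
        "by_registered_over_100_edits_per_year": sum(
            a.registered and a.edits_per_year > 100 for a in attacks) / n,
    }


def repeat_offender_moderation(attacks, min_attacks=4):
    """Share of users with at least `min_attacks` attacks who were ever moderated."""
    by_user = defaultdict(list)
    for a in attacks:
        by_user[a.user].append(a.moderated)
    repeat = [flags for flags in by_user.values() if len(flags) >= min_attacks]
    if not repeat:
        return None  # no user reached the repeat-offender threshold
    return sum(any(flags) for flags in repeat) / len(repeat)
```

Note that the moderation statistics are only as good as the classifier's labels. False positives and false negatives in the flagged set propagate directly into these proportions.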

More information on how we performed these analyses and other questions that we investigated can be found in our research paper:

Wulczyn, E., Thain, N., & Dixon, L. (2017). Ex Machina: Personal Attacks Seen at Scale. To appear in Proceedings of the 26th International Conference on World Wide Web (WWW 2017).

While we are excited about the contributions of this work, it is only a small step toward a deeper understanding of online harassment and ways to mitigate it. This research has several limits. It looked only at egregious, easily identifiable personal attacks. The data is only in English, so the model we built understands only English. The model does little for other forms of harassment on Wikipedia; for example, it is not very good at identifying threats. There are also important things we do not yet know about our model and data: for example, did it inadvertently learn unintended biases from the crowdsourced ratings? We hope to explore these issues by collaborating further on this research.

We also hope that collaborating on these machine learning methods might help online communities better monitor and address harassment, leading to more inclusive discussions. These methods also give researchers new ways to tackle many more questions about harassment at scale, including the impact of harassment on editor retention and whether certain groups are disproportionately silenced by harassers.

Tackling online harassment, like defining it, is a community effort. If you’re interested or want to help, you can get in touch with us and learn more about the project on our wiki page. Help us label more comments via our wikilabels campaign.

Ellery Wulczyn, Data Scientist, Wikimedia Foundation

Dario Taraborelli, Head of Research, Wikimedia Foundation

Nithum Thain, Research Fellow, Jigsaw

Lucas Dixon, Chief Research Scientist, Jigsaw

Editor’s note: This post has been updated to clarify potential misunderstandings in the meaning of “anonymity” under “What is the role of anonymity in personal attacks?”
