“Wiki” used to mean fast…

The creator of the wiki, Ward Cunningham, wanted to make it fast and easy to edit web pages. Cunningham named his software after a Hawaiian word for “quick.” That’s why the Wikimedia Foundation is happy to report that editing Wikipedia is now twice as quick. Over the last six months we deployed a new technology that speeds up MediaWiki, Wikipedia’s underlying PHP-based code. HipHop Virtual Machine, or HHVM, reduces the median page-saving time for editors from about 7.5 seconds to 2.5 seconds, and the mean page-saving time from about 6 to 3 seconds. Below, I’ll explain the technical background for HHVM on MediaWiki and some of the far-reaching benefits of this change that will go beyond the recent performance gains.

How keeping Wikipedia fast meant slowing Wikipedia down

Nearly half a billion people access Wikipedia and the other Wikimedia sites every month. Yet the Wikimedia Foundation has only a fraction of the budget and staff of other top websites. So how does it serve users at a reasonable speed? Since the early years of Wikipedia the answer has been aggressive caching: Placing static copies of Wikipedia pages on more than a hundred different servers, each capable of serving thousands of readers per second, often located in a data center closer to the user.

This strategy allowed Wikipedia to scale from a trickle of users to tens of thousands of pageviews per second, while keeping expenses for servers and data centers low. The first Squid cache servers were set up in 2004 by then-volunteer developer Gabriel Wicke. Today a Principal Software Engineer at the Wikimedia Foundation, Gabriel still recalls watching the newly installed Squids easily handle the first mention of Wikipedia on German prime-time TV by news anchor Ulrich Wickert, as request rates climbed from 20/s to 1500/s.

While caching has been an elegant solution, it cannot address the needs of all users. Between 2% and 4% of requests can’t be served via our caches, and there are users who always need to be served by our main (uncached) application servers. This includes anyone who logs into an account, as they see a customized version of Wikipedia pages that can’t be served as a static cached copy, as well as all web requests involved when a user is editing a page.
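The split between cached and uncached traffic can be illustrated with a toy model. This is only a sketch of the general idea, not our actual Squid configuration: the `Request` and `Frontend` classes, the `render` function, and the rule used to decide what is cacheable are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    path: str
    logged_in: bool = False   # logged-in users see customized pages
    is_edit: bool = False     # requests made while editing a page


class Frontend:
    """Toy front end: a page cache in front of a (slow) application server."""

    def __init__(self, render):
        self.render = render        # hypothetical call into the app server
        self.cache = {}             # path -> rendered page
        self.app_server_hits = 0

    def handle(self, request: Request) -> str:
        # Assumed rule: only anonymous, non-editing requests are cacheable.
        cacheable = not (request.logged_in or request.is_edit)
        if cacheable and request.path in self.cache:
            return self.cache[request.path]   # fast path: static copy
        self.app_server_hits += 1             # slow path: run the application
        page = self.render(request)
        if cacheable:
            self.cache[request.path] = page
        return page


def render(request: Request) -> str:
    """Stand-in for MediaWiki generating a page."""
    who = "user" if request.logged_in else "anonymous"
    return f"<html>{request.path} for {who}</html>"
```

In this model, repeated anonymous views of the same page reach the application server only once, while every logged-in or editing request reaches it every time. That is why app server speed determines the experience of editors.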

This means that the user experience for Wikipedia editors hinges directly on the performance of the MediaWiki app servers. These users are the lifeblood of any wiki — they are the people who contribute and curate content, as well as the users most likely to stay logged in while reading. And unlike the vast majority of visitors who see the fast, cached version of the site, our heaviest users and contributors have gradually experienced a slowdown in Wikipedia’s performance.

Speed matters

MediaWiki is written in PHP, a dynamic, loosely-typed language that has some natural advantages for a large-scale website running on an open source software platform. PHP is a flexible language, suited to rapid prototyping, and has a low barrier to entry, making it relatively easy for novice developers to contribute. At the same time, PHP also has some disadvantages, including known performance issues.

Adding more servers alone would not have addressed this problem. As a single-threaded language, PHP cannot be parallelized — that is, a task that takes up a whole CPU core can’t be sped up by spreading it over multiple cores. At the same time, the clock speed of single CPU cores has not improved significantly over the past decade. As MediaWiki has grown more sophisticated with new editing features, and Wikipedia’s content has grown increasingly complex, editing performance has slowed down.

It’s been shown that even small delays in response time on websites (e.g. half of a second) can result in sharp declines in user retention. As a result, popular sites such as Google and Facebook invest heavily in site performance initiatives to ensure their continued popularity. Formerly popular sites such as Friendster suffered due to a lack of attention to these issues. It is a priority of the Wikimedia Foundation to ensure Wikipedia and its sister projects are usable and responsive in order to serve their editor communities and sustain their mission.

As performance engineer for the Wikimedia Foundation, I once asked Ward Cunningham whether the “quickness” in the term “wiki” refers only to the speed of the wiki software itself, or goes beyond that to mean the speed with which someone can translate intent into action. He replied:

“Yes, the ‘quickness’ I felt had everything to do with expressing myself. I had been authoring pages in vi [a fast text editor] writing rather plain html to be served directly by httpd [a bare-bones web server]. Even with this simplicity it was a pain to split a thought into two pages without breaking my train of thought.”

Ward went on to explain how, after making the first 15 or 20 pages with his new invention, he arrived at the name “Wiki Wiki Web” – the very fast web.

How HHVM addresses this problem

Fortunately, Wikipedia is not the only top-ten website written in PHP facing these legacy challenges. The largest of these, Facebook, has tackled this PHP performance issue by building an innovative and effective solution that they call HipHop Virtual Machine (HHVM), an alternative implementation of PHP that runs much faster than previous solutions, including the standard Zend interpreter.

PHP is a dynamic, interpreted language, so it has the inherent performance disadvantage that all interpreted languages have when compared to compiled languages such as C. HHVM is able to extract high performance from PHP code by acting as a just-in-time (JIT) compiler, optimizing the compiled code while the program is already running. The basic assumption guiding HHVM’s JIT is that while PHP is a dynamically typed language, the types flowing through PHP programs aren’t very dynamic in practice. HHVM observes the types present at runtime and generates machine code optimized to operate on these types.
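The idea of specializing on observed types can be sketched in a few lines of Python. This is a conceptual illustration only, not how HHVM is implemented: the `generic_add` function, the `SpecializingAdd` class, and the `SPECIALIZED` table are invented for this example, and the "specialized" versions here are ordinary Python methods standing in for generated machine code.

```python
def generic_add(a, b):
    """Slow path: handles any mix of types, as a naive interpreter would."""
    if isinstance(a, str) or isinstance(b, str):
        return str(a) + str(b)
    return a + b


# Stand-ins for machine code generated for one specific pair of types.
SPECIALIZED = {
    (int, int): int.__add__,
    (str, str): str.__add__,
}


class SpecializingAdd:
    """Remembers the first type pair it sees and specializes on it."""

    def __init__(self):
        self.guard = None          # type pair the fast path was built for
        self.fast = None           # specialized implementation, once chosen
        self.fast_path_hits = 0
        self.slow_path_hits = 0

    def __call__(self, a, b):
        types = (type(a), type(b))
        # Guard: the fast path is only valid for the types it was built for.
        if self.fast is not None and types == self.guard:
            self.fast_path_hits += 1
            return self.fast(a, b)
        self.slow_path_hits += 1
        if self.guard is None and types in SPECIALIZED:
            self.guard = types
            self.fast = SPECIALIZED[types]
        return generic_add(a, b)
```

If almost every call arrives with the same types, almost every call passes the guard and takes the fast path. The occasional call with unexpected types still produces the right answer, just more slowly.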

HHVM still fulfills the role of a PHP runtime interpreter, serving requests immediately upon starting up, without pre-compiling code. But while running, HHVM analyzes the code in order to find opportunities for optimization. The first few times a piece of code is executed, HHVM doesn’t optimize at all; it executes it in the most naive way possible. But as it’s doing that, it keeps a count of the number of times it has been asked to execute various bits of code, and gradually it builds up a profile of the “hot” (frequently invoked and expensive to execute) code paths in a program. This way, it can be strategic about which code paths to study and optimize.
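The counting step described above can be sketched as follows. This is a simplified illustration under stated assumptions, not HHVM’s actual profiler: the `HotPathProfiler` class and the threshold value are made up, and the sketch counts invocations only, whereas a real profile also weighs how expensive each code path is.

```python
from collections import Counter

HOT_THRESHOLD = 3  # assumed value, chosen only for this example


class HotPathProfiler:
    """Counts how often each piece of code runs and flags the hot ones."""

    def __init__(self, threshold=HOT_THRESHOLD):
        self.threshold = threshold
        self.calls = Counter()   # name -> number of executions so far
        self.hot = set()         # names selected for optimization

    def run(self, name, func, *args):
        self.calls[name] += 1
        if self.calls[name] >= self.threshold:
            # A real JIT would now compile an optimized version of this code.
            self.hot.add(name)
        return func(*args)
```

Code that runs only once or twice never crosses the threshold, so no optimization effort is spent on it.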

Facebook publishes the code of HHVM under a free software license, and encourages reuse by other sites. MediaWiki was one of the first applications they showcased as a possible use case.

What we have already gained by switching to HHVM

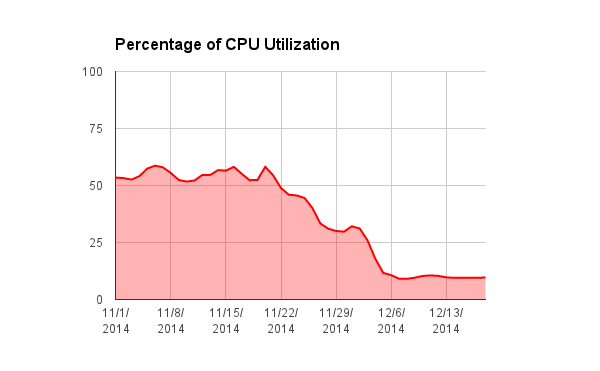

Following extensive preparations, all our app servers are now running MediaWiki on HHVM instead of Zend. The performance gains we have observed during this gradual conversion in November and December 2014 include the following:

- The CPU load on our app servers has dropped drastically, from about 50% to 10%. Our TechOps team member Giuseppe Lavagetto reports that we have already been able to slash our planned purchases for new MediaWiki application servers substantially, compared to what would have been necessary without HHVM.

- The mean page save time has been reduced from ~6s to ~3s (this is the time from the user hitting ‘submit’ or ‘preview’ until the server has completed processing the edit and begins to send the updated page contents to the user). Given that Wikimedia projects saw more than 100 million edits in 2014, this means saving a decade’s worth of latency every year from now on.

- The median page save time fell from ~7.5s to ~2.5s.

- The average page load time for logged-in users (i.e. the time it takes to generate a Wikipedia article for viewing) dropped from about 1.3s to 0.9s.
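The “decade’s worth of latency” figure in the list above can be checked with a back-of-the-envelope calculation. The sketch below uses only the rounded numbers quoted in this post (100 million edits per year, mean save time falling from about 6 to about 3 seconds), so the result is an estimate rather than a measurement.

```python
EDITS_PER_YEAR = 100_000_000   # "more than 100 million edits in 2014"
MEAN_SAVE_BEFORE_S = 6.0       # approximate mean page save time before HHVM
MEAN_SAVE_AFTER_S = 3.0        # approximate mean page save time after HHVM

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

saved_seconds = EDITS_PER_YEAR * (MEAN_SAVE_BEFORE_S - MEAN_SAVE_AFTER_S)
saved_years = saved_seconds / SECONDS_PER_YEAR

print(f"{saved_years:.1f} years of waiting saved per year of editing")
```

With these inputs the result is about 9.5 years, which matches the rough "decade" in the text.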

How we made the switch

The potential benefits of HHVM for MediaWiki have been obvious for some time. In May 2013, WMF developers first met with Facebook’s team to discuss possible use of an earlier incarnation called “HipHop,” which was implemented as a full up-front compiler instead of a JIT. There was already hope that this would make our servers run 3-5 times faster, but we were concerned about fidelity (i.e. whether MediaWiki would produce the exact same output after the conversion) in some edge cases.

We resumed work on the conversion in earnest this year, after a new version of HHVM had come out. Resolving fidelity issues required some effort, but it turned out well. After we had already been working on the conversion for several months, Facebook approached us offering to donate some developer time to help with this task. Facebook developer Brett Simmers spent one month full-time with our team providing very valuable assistance, and Facebook also offered to make themselves available for other issues we might encounter.

Overall, the transition took about 6 months, with most of the work falling into one of the following three areas:

- Fidelity: Overall, HHVM’s compatibility with PHP is quite good, but with a code base as large as ours, there were of course edge cases. Fixing these small issues required a substantial amount of work.

- Migrating our PHP extensions: MediaWiki uses three PHP extensions (not to be confused with extensions to MediaWiki itself) which are written in C: a sandbox for Lua (the scripting language editors can use to automate e.g. templates), Wikidiff2 (providing fast diffing between different versions of wiki pages), and “fast string search” (for fast substring matching, replacing the default PHP implementation, which does not support codepoints well and had poor performance for non-Latin languages; this is separate from our general user-facing search engine, which serves other purposes). These three were tightly integrated with the Zend PHP framework, and the existing rudimentary compatibility layer offered by HHVM was not sufficient to run them out of the box. In the process of migrating them, WMF Lead Platform Architect Tim Starling thoroughly rewrote the compatibility layer for HHVM, contributing about 20,000 lines of code upstream.

- Updating server configuration: Many of our server configuration tools, such as Puppet scripts, were tightly coupled to the old PHP environment and needed to be updated or converted. This involved cleaning up tons of accumulated cruft, and also upgrading our servers from Ubuntu Precise to Ubuntu Trusty.

Once we were ready to roll out the converted software to live usage, we started switching over servers one by one, initially provisioning a separate pool of HHVM servers with its own cache. To allow editors to test for any remaining bugs, we offered access to the HHVM servers as an opt-in Beta feature for a while, tracking edits made via HHVM with a special edit tag. Wikipedians uncovered quite a few remaining edge cases that were fixed before the main rollout. We then slowly increased the share of overall (uncached) traffic served by HHVM, until all users were being served via HHVM after a final sprint at the beginning of December.
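The general shape of such a rollout can be modeled in a few lines. This is a deliberately simplified sketch, not our actual load balancer setup: the `Rollout` class and the server names are hypothetical, and it merges the opt-in Beta phase and the server-by-server phase into one routing rule.

```python
import random


class Rollout:
    """Tracks which runtime each (hypothetical) app server is running."""

    def __init__(self, servers):
        self.runtime = {name: "zend" for name in servers}

    def switch(self, name):
        """Convert one server from Zend to HHVM."""
        self.runtime[name] = "hhvm"

    def pool(self, runtime):
        return sorted(n for n, r in self.runtime.items() if r == runtime)

    def hhvm_share(self):
        """Fraction of servers (and, roughly, uncached traffic) on HHVM."""
        return len(self.pool("hhvm")) / len(self.runtime)

    def route(self, opted_in, rng=random):
        hhvm = self.pool("hhvm")
        if opted_in and hhvm:
            return rng.choice(hhvm)              # Beta testers always get HHVM
        return rng.choice(sorted(self.runtime))  # everyone else: any server
```

Each call to `switch` moves a little more traffic to HHVM, so a problem found along the way affects only part of the user base.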

Other advantages of HHVM, and its future potential

The speedups we have observed so far are just the beginning. We expect many other benefits resulting from the conversion to HHVM, for example:

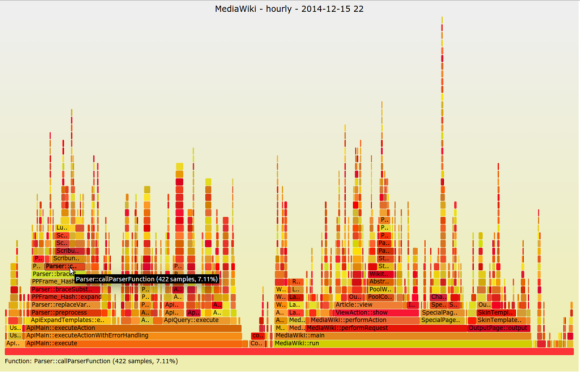

- HHVM offers sophisticated observability tools, which make it easier to spot bottlenecks and identify opportunities for eliminating unnecessary work. These tools allow us to optimize the performance of MediaWiki much more than we have been able to do in the past. This “flame graph” is a first example of that: It visualizes which parts of the code consume most CPU time, keeping track of which other parts they have been called from. The data that is used to generate the graph is supplied by an HHVM extension called ‘Xenon’, which snapshots server activity at regular intervals, showing which code is on-CPU. The design of Xenon is a great example of how HHVM was designed from the ground up to power a complex application deployed to a large cluster of computers (as opposed to Zend, whose architecture reflects its origins in the 1990s web).

- HHVM offers static program analysis (inferring from the beginning what sort of values a variable can assume – e.g. strings, integers, or floating point numbers). This enables far more rigorous verification before deploying code, which reduces errors and will also help our existing quality control mechanisms.

- In addition to PHP, HHVM can also execute code written in Hack, a language developed by Facebook. Hack is syntactically similar to PHP, but it adds some powerful features that are not available in PHP itself, like asynchronous processing. We are not using Hack in production yet, but we are following the development of the language with tremendous interest and excitement.

- The HHVM team has already included some MediaWiki parsing tasks in its performance regression tests, meaning that upstream changes which would deteriorate MediaWiki’s performance can be detected and prevented even before a new HHVM version is released and deployed by us. This was not the case in the old PHP framework we were using before.

- More generally, we are confident in having found a strong, capable upstream partner in Facebook, who is sensitive to our needs as reusers, and is committed to maintaining and improving HHVM for years to come. In turn, we have started to contribute back upstream. The most substantial contribution made by a Wikimedia developer is the above-mentioned major rewrite of the Zend Compatibility Layer, which allows Zend PHP extensions to be used with HHVM. These improvements by WMF Lead Platform Architect Tim Starling have been highlighted in the release notes for HHVM 3.1.0.

- With HHVM, we have the capacity to serve dynamic content to a far greater subset of visitors than before. In the big picture, this may allow us to dissolve the invisible distinction between passive and active Wikipedia users further, enabling better implementation of customization features like a list of favorite articles or various “microcontribution” features that draw formerly passive readers into participating.
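The flame graph mentioned in the list above is built from periodic snapshots of what the server is executing. The sketch below shows one common way to aggregate such snapshots. It does not reproduce Xenon’s actual output format, which this post does not describe: the sample data and function names are invented, and each sample is assumed to be a call stack listed from the outermost frame to the innermost.

```python
from collections import Counter


def fold_stacks(samples):
    """Count identical call stacks, joining frames with ';'."""
    return Counter(";".join(stack) for stack in samples)


def inclusive_share(samples, function):
    """Fraction of samples in which `function` appears anywhere on the stack."""
    hits = sum(1 for stack in samples if function in stack)
    return hits / len(samples)


# Hypothetical snapshots taken at regular intervals.
samples = [
    ["main", "handle_request", "parse"],
    ["main", "handle_request", "parse"],
    ["main", "handle_request", "parse", "sanitize"],
    ["main", "handle_request", "render"],
]

folded = fold_stacks(samples)
for stack, count in folded.most_common():
    print(count, stack)
```

In a flame graph, the width of each box corresponds to a share like the one `inclusive_share` computes. In this made-up data, `parse` is on the stack in three of the four samples, so its box would span three quarters of the graph.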

Ori Livneh

Principal Software Engineer

Wikimedia Foundation
